Machine Learning 04: DNN, CNN, RNN, and Representation Learning

- Why not Logistic Regression?

- Multilayer Networks of Sigmoid Units

- Example: Learn probalistics XOR

- A Convolutional Neural Net for Handwritten Digit recognition: LeNet

- Convolution layer, Maxpooling layer

- Dealing with Overfitting by Validation Set

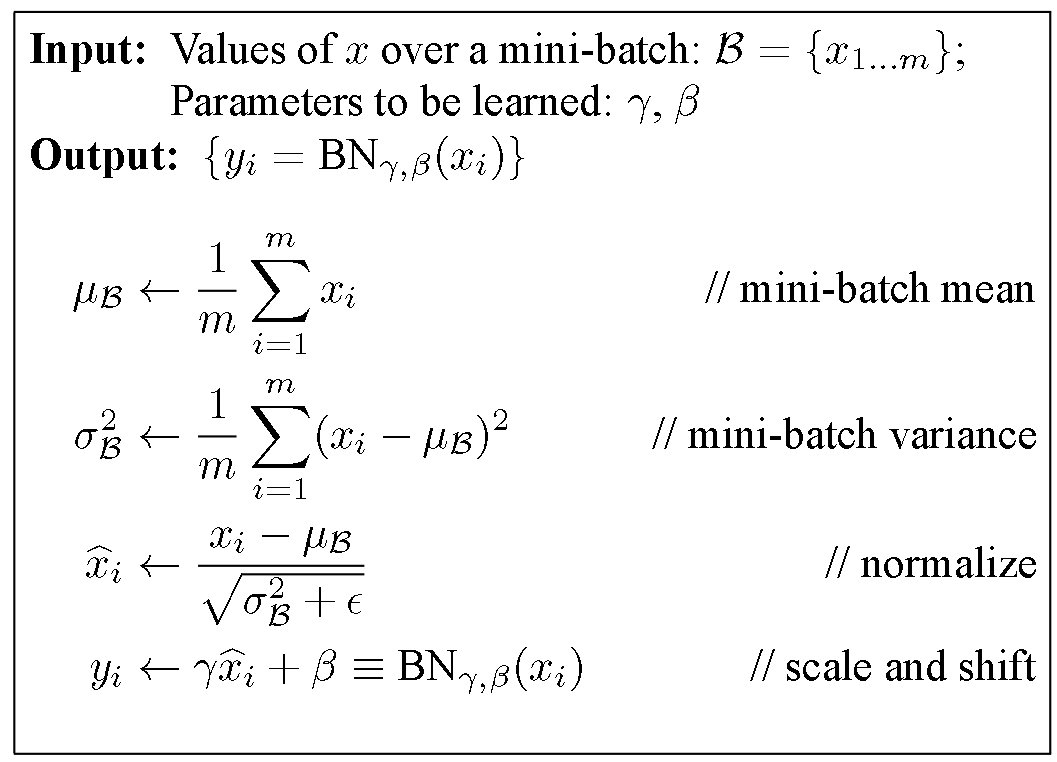

- Batch Normalization

- Learning Hidden Layer Representations

- Learning Distributed Representations for Words

- What is cross entropy?

- Recurrent Neural Networks

- Long Short Term Memory (LSTM) Unit

- Gated Recurrent Units (GRUs)

- Programming Frameworks for Deep Nets

Course Notes of Professor Tom Mitchell Machine Learning Course @ CMU, 2017

Why not Logistic Regression?

We like logistic regression, but:

- how would it perform when trying to learn P(image contains Hillary Clinton | pixel values X1, X2 ... X10,000)?

- what Xi image features to use? edges? color blotches? generic face? subwindows? lighting invariant properties? position independent? SIFT features?

Multilayer Networks of Sigmoid Units

E.g. 1 Speech Recognition

This is a multilayer networks of sigmoid units to do speech recognize. 1-hot vector encoding for the last layer to do classification into certain category. On the right side, we can see the decision surface is very complecated instead of linear surface of logistic regression.

E.g. 2 ALVINN self-driving car

[ ]

]

- 4 hidden units in the hidden layer

- fully connected, output units connecting to all hidden layer units

- One-hot encoding for the output 30 units in training data

Sigmoid Unit

Rectified Linear Unit (ReLU)

[ ]

]

Comparing with Sigmoid, the only difference is the activation function *f*. ReLU change the sigmoid function to thresholded output. Note that ReLU is still linear classifier!

Many types of parameterized units

- Sigmoid units

- ReLU

- Leaky ReLU (fixed non-zero slope for input<0)

- Parametric ReLU (trainable slope)

- Max Pool

- Inner Product

- GRU’s

- LSTM’s

- Matrix multiply

- .... no end in sight

Training Deep Nets

- Choose loss function J(θ) to optimize

+ sum of squared errors for y continuous: Σ (y – h(x; θ))^2

+ maximize conditional likelihood: Σ log P(y|x; θ)

+ MAP estimate: Σ log P(y|x; θ) P(θ)

+ 0/1 loss. Sum of classification errors: Σ δ(y = h(x; θ) — Not a good choice because not smooth

+ ...

- Design network architecture

+ Network of layers (ReLU’s, sigmoid, convolutions, ...)

+ Widths of layers

+ Fully or partly interconnected

+ ...

- Training algorithm

+ Derive gradient formulas

+ Choose gradient descent method, including stopping condition

+ Experiment with alternative architectures

+ Drop out

Example: Learn probalistics XOR

- Given boolean Y, X1, X2 learn P(Y|X1,X2), where

- P(Y=0 | X1 = X2) = 0.9

- P(Y=1 | X1 ≠ X2) = 0.9

- Can we learn this with logistic regression?

- No, it's not a linear problem.

- Draw the axis with x1, x2 here to see.

- What can we do?

- Add a hidden layer which gets the inputs from x1 and x2 and output to the y results.

Feedforward Process

Gradient Calculation with Chain Rule

Loss function to be minimized: negative log likelihood

J(theta) = E -logP(Y=y|X=x)

use chain rule:

In which:

Back Propagation Process

A Convolutional Neural Net for Handwritten Digit recognition: LeNet

Convolution layer, Maxpooling layer

A detailed guide of forward propagation and back propagation.

Dealing with Overfitting by Validation Set

Our learning algorithm involves a parameter n=number of gradient descent iterations

- Separate available data into training and validation set

- Use training to perform gradient descent

- n <— number of iterations that optimizes validation set error

This gives unbiased estimate of optimal n (but still an optimistically biased estimate of true error)

Batch Normalization

Key idea: add batch normalization layers to network. For each minibatch, BN layer scales each feature to have mean 0, variance 1. Then adds an offset β and scaling γ parameter to train.

Impact of Batch Normalization on MNIST net:

[Quora]

Batch normalization potentially helps in two ways: faster learning and higher overall accuracy. The improved method also allows you to use a higher learning rate, potentially providing another boost in speed. A detailed intro of BN.

Learning Hidden Layer Representations

[ ]

]

Note that the hidden layer is actually like 3 bit to represent numbers: 1-0-0, 0-0-1, 0-1-0, 1-1-1, 0-0-0, 0-1-1, 1-0-1, 1-1-0.

Note that hidden layer values are not boolean. So it have stronger representative ability .

Learnt Hidden Unit Weights in Face Recognition

Learning pose from face pictures: Link

Learning Distributed Representations for Words

Learning Distributed Representations for Words

- also called “word embeddings”

- word2vec is one commonly used embedding

- based on skip gram model

Key idea: given word sequence w1 w2 … wT train network to predict surrounding words. for each word wt predict wt-2, wt-1, wt+1, wt+2

e.g., “the dog jumped over the fence in order to get to..” — “the cat jumped off the widow ledge in order to ...”

Word2Vec Word Embeddings

Skip-gram Word Embeddings

Learning Representations for Words and Relations

NELL (Never Ending Language Learner) is learning to read the web, building large knowledge graph. Yang & Mitchell, 2017 PDF

[ ]

]

What is cross entropy?

Our negative log likelihood loss is also called cross entropy. Why?

Recurrent Neural Networks

- Many tasks involve sequential data

- predict stock price at time

t+1based on prices att, t-1, t-2, … - translate sentences (word sequences) from Spanish to English

- transcribe speech (sound sequences) to text (word sequences)

- predict stock price at time

- Key idea: recurrent network uses (part of) its state at

tas input fort+1

Note that in the diagram, the weight W is shared by each iteration. (parameter sharing). When using back propagation to calculate the gradient, there will be a problem of vanishing and / or exploding gradients. (0.9 × 0.9 × 0.9 × … × 0.9 ≈ 0)

Gradient Clipping: Managing exploding gradients

Simply clip gradient when they explode.

Bi-directional Recurrent Neural Networks

Key idea: processing of word at position t can depend on following words too, not just preceding words.

[ ]

]

- For the hidden layer units

h, it accepts two inputs, one from inputxand the other from the previous hidden layer unit (representing the previous time). - In the bi-directional RNN,

glayer is added to accept inputs from "future" (the output of nextgunit). - The output

ois decided by bothhandg, containing both the "past" and "future" information.

Deep Bidirectional Recurrent Network

In [Irsoy & Cardie, 2014], Deep Bidirectional Recurrent Network shows better performance in Opinion Mining tasks than shallow RNN.

Long Short Term Memory (LSTM) Unit

In previous RNN, there is a problem that weight vanishing after long steps (e.g. 100 steps later). In this case, the network cannot make use of information happened long ago. LSTM is to solve the problem by adopting several gates for the hidden layer units.

Gated Recurrent Units (GRUs)

Programming Frameworks for Deep Nets

- TensorFlow (Google)

- TFLearn (runs on top of TensorFlow, but simpler to use)

- Theano (University of Montreal)

- Pytorch (Facebook)

- CNTK (Microsoft)

- Keras (can run on top of Theano, CNTK, TensorFlow)

- Caffe (Berkeley AI Research)

Many support use of Graphics Processing Units (GPU’s)