Music Generation Based on Char-RNN

This post is about the result of using Karpathy's method to generate music.

Last week, I read The unreasonalble Effectiveness of Recurrent Neural Networks in Andrej Karpathy's blog, which, impleted Oxfords cs course practice 6's codes.

It's a coincidence that my friends and I in MC-lab collected a music stave dataset for scientific use. So I tried using this dataset to generate two pieces of music.

P.S. The music stave dataset with 300+2,790+200 images will be released after paper accepted.

What is Char-RNN & Why it is impressive

Char-Rnn harnesses LSTM to establish a network that absorbs text sequence material as train set and valid set, trains with the flow of text char by char, and samples by giving a start char which automatically gets the next char by selecting the most possible output char generated by forwarding previous char into network.

This network has two pros, that is, easy to train (cause text materials are easy to be found), and easy to get surprising results (it seems like machine has intelligence).

What's more, RNN shows great potential in sequence processing recently. It can learn the 'rules' in sequence, which make it act like human being, for instance: A Neural Conversational Model, Oriol Vinyals and Quoc V. Le, google. shows LSTM potential in talking.

How to Generate Music

First - Prepare Music Text

The music dataset that we will release later (dataset link will be added in this post, yet the collection of all grandtruth can be download musicGT2099.pdf) includes 300 "Clean", 2,790 "Synthesized", and 200 "Real-World" images of music score.

The grandtruth of dataset is given by manually labelling, ranging from 1~5+ according to the pitch of each musical note.

Remember to change the pdf file into txt file before using it to train the network.

Second - Run Karpathy's Code

Code can be downloaded from github.

Torch7 needs to be setted up, and GPU is NOT a must for training of this scale.

More layers and parameters are needed if you want to train the network with a large sequence txt file (some what like 20M+). Simply change the option -num_layers will be OK.

The whole train and sample process is in detail here by Karpathy.

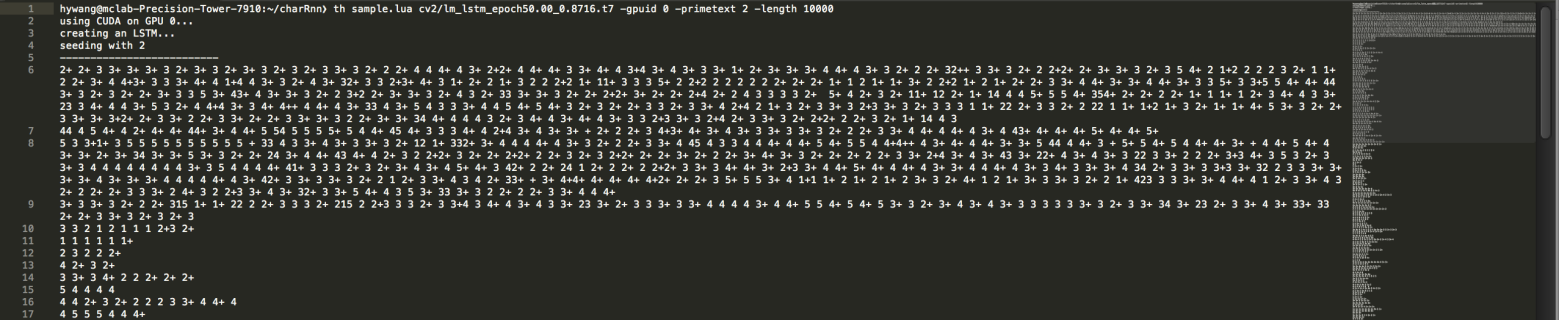

Third - Get Music from the TXT You Sample The sampling results look like the grandtruth we feed in. But some long pieces can be generated, like following:

Finally, Music is generated by manually inputing the pitch into MuseScore2. Scroll down to listen the generated music :)

Result

MuseScore2 can generate pdf of music stave: Generated_Music_Stave.pdf , as well as music:

Further Work

About Music

+ Add pause and duration information of music sheet to get more fluential music.

+ Use the same style music data to train the network, may generates the same style music.

+ Input sound rather than text.

About Other

+ Predict stock trends. (there were similar work about 3 years ago)

+ Generate literature works like poems, novels, etc.

+ Explore cipher code. (output is valid when 'temprature' is close to zero)

Added on 2015.7.24 about James Blaha's work

Guitar Music Generation By James Blaha

Recently when I incidentally review Karpathy's blog, I found there already exists a comment about music generating by James Blaha.

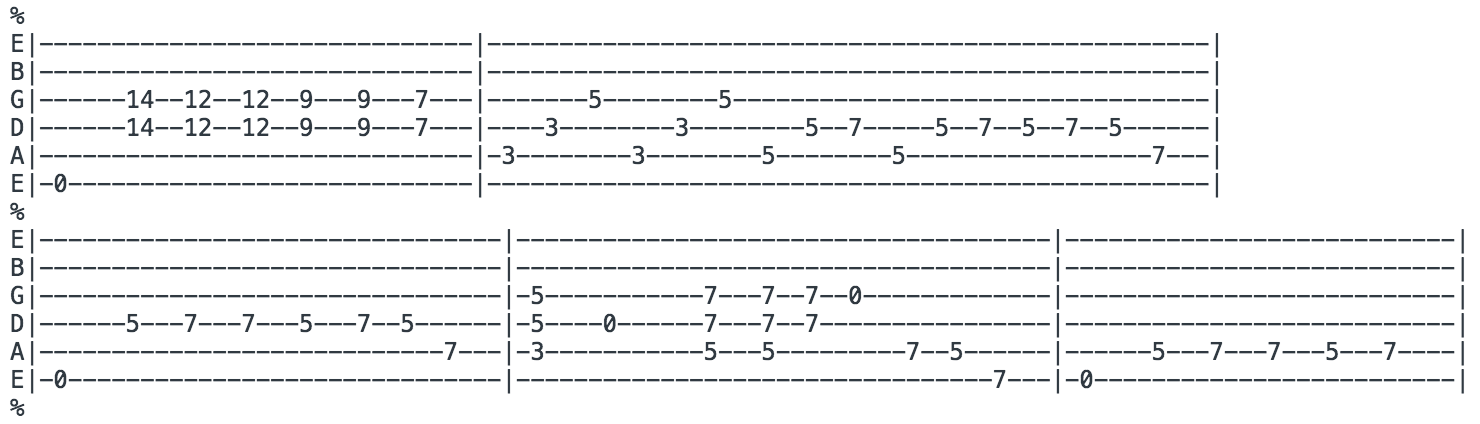

The form of his inputs is like this:

The inputs are famous guitar tabs (about 500mb) in ASCII. Then the outputs are sent into GuitarPro to generate music.

Listen to his work here.

His Dataset, trained models can be download here.